12,000+ API Keys and Passwords Found in Public Datasets: A Growing Security Threat

In today’s digital world, data security is more crucial than ever. However, a recent discovery has raised significant concerns—over 12,000 API keys and passwords have been found exposed in public datasets used for training Large Language Models (LLMs).

The exposed secrets were found in data collected by Common Crawl, a nonprofit project that crawls the web and publishes the results as a free, open dataset. How did they end up there, and what are the risks? Let's break it down.

Common Crawl: The Internet's Dumpster Diver

Imagine a tool that scans the internet, collecting massive amounts of data: web pages, GitHub repositories, forums, and more. That's Common Crawl, an open-source project that performs large-scale web scraping.

Common Crawl runs a new crawl roughly once a month and adds a fresh snapshot to its archive each time. Think of it as searching for valuable things in a pile of digital garbage. The crawler collects whatever is publicly reachable, so alongside the useful data it also picks up sensitive information, including API keys, passwords, and other confidential credentials.

Now, here’s the shocking part: this dataset, which is used for training large language models (LLMs), contains over 12,000 exposed API keys and passwords. That’s a massive security risk!

Requirements for Running a Crawl Like Common Crawl

If you want to run a web crawl similar to Common Crawl, you need powerful infrastructure, because web scraping at this scale is resource-intensive. Here's what you need:

1️⃣ High Storage Capacity: The Common Crawl dataset is massive, often exceeding 400 TB of data. You need storage that can handle this volume, such as cloud object storage (Amazon S3, Google Cloud Storage) or a large on-premises data center.

2️⃣ High Computational Power: Processing vast amounts of text and metadata takes a lot of compute, mostly CPU, usually spread across many machines. Distributed computing tools like Apache Hadoop or Apache Spark can help manage large-scale data processing.

3️⃣ Web Crawling Frameworks: Popular tools include Scrapy (a Python web crawling framework), Apache Nutch (the open-source crawler that Common Crawl's own crawler is built on), Heritrix (the Internet Archive's web crawler), Selenium (for rendering JavaScript-heavy websites), and BeautifulSoup (for parsing HTML and extracting data from it).
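The core of the parsing step is extracting links, because that is how a crawler discovers new pages. Here is a minimal sketch of that step. It uses Python's built-in `html.parser` instead of BeautifulSoup so it runs without extra packages. The class and function names are illustrative and do not come from any of the crawlers above.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on a single page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links ("/docs") against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html: str, base_url: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    page = '<p><a href="/docs">Docs</a> and <a href="https://example.org/">elsewhere</a></p>'
    for link in extract_links(page, "https://example.com/index.html"):
        print(link)  # each link becomes a candidate for the crawl queue
```

A production crawler would also deduplicate these URLs, filter them by scheme and domain, and check robots.txt before queuing them.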

4️⃣ Network Bandwidth & Proxies: Large-scale crawling requires high-speed internet connections to fetch web pages quickly. You may also need rotating proxies or a VPN to avoid getting blocked by websites.

5️⃣ Compliance with robots.txt: Websites use a robots.txt file to tell crawlers which parts of the site they may access. Ethical crawlers respect these rules to avoid legal trouble and to stay out of areas that site owners have excluded.
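Python's standard library includes a robots.txt parser, so a crawler can check the rules before every fetch. This sketch parses a made-up robots.txt string instead of downloading one. A live crawler would call `set_url()` and `read()` for each site's `/robots.txt`. The user-agent name `ExampleBot` is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content, made up for illustration.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "ExampleBot"  # hypothetical crawler name


def load_rules(robots_txt: str) -> RobotFileParser:
    rules = RobotFileParser()
    # parse() accepts the file's lines; a live crawler would instead call
    # rules.set_url("https://<site>/robots.txt") followed by rules.read().
    rules.parse(robots_txt.splitlines())
    return rules


if __name__ == "__main__":
    rules = load_rules(EXAMPLE_ROBOTS_TXT)
    for url in ("https://example.com/index.html", "https://example.com/private/keys.txt"):
        verdict = "fetch" if rules.can_fetch(USER_AGENT, url) else "skip"
        print(verdict, url)
    # Seconds to wait between requests, if the site asks for it.
    print("crawl delay:", rules.crawl_delay(USER_AGENT))
```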

6️⃣ Database for Storing Data: A NoSQL database (MongoDB, Elasticsearch) or distributed file storage (HDFS, Amazon S3) is needed to manage scraped data efficiently.

How Are These API Keys and Passwords Exposed?

Most leaks happen because of human error and poor security practices. People unknowingly post sensitive information on the public web, where automated crawlers like Common Crawl's can easily capture it.

Here are some of the common ways this happens:

1️⃣ Hardcoding Secrets in GitHub Repositories: Developers sometimes accidentally commit API keys in their source code and push them to public GitHub repositories. Attackers can easily find these keys with GitHub's code search or with Google dorking.
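Finding leaked keys is mostly pattern matching, which is why it is so easy to automate. Below is a simplified sketch of such a scanner. The three patterns are illustrative assumptions. `AKIA` followed by 16 characters is the well-known shape of an AWS access key ID, and `ghp_` marks a GitHub personal access token. Real tools like TruffleHog ship far more detectors and can also check whether a match is still live.

```python
import re

# Simplified, illustrative detectors. AKIA + 16 uppercase letters/digits is the
# well-known shape of an AWS access key ID; ghp_ + 36 characters is a GitHub
# personal access token. Real scanners use many more (and stricter) rules.
DETECTORS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "password_assignment": re.compile(
        r"""(?i)(?:password|passwd|pwd)\s*[:=]\s*["'][^"']+["']"""
    ),
}


def find_secrets(text: str) -> list[tuple[int, str, str]]:
    """Return (line_number, detector_name, matched_text) for every hit."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in DETECTORS.items():
            for match in pattern.finditer(line):
                hits.append((lineno, name, match.group(0)))
    return hits


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
    source = 'AWS_KEY = "AKIAIOSFODNN7EXAMPLE"\ndb_password = "hunter2"\nprint("hello")\n'
    for hit in find_secrets(source):
        print(hit)
```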

2️⃣ Exposed .env Files: Environment files (.env) are meant to keep credentials out of source code. However, if a web server is misconfigured to serve them, these files become publicly accessible. A simple Google search like this one can turn up exposed .env files:

inurl:.env "DB_PASSWORD"
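One defensive sketch is to refuse any request that touches a hidden file. The function below is written for a hypothetical Python static-file handler. In practice the fix usually lives in the web server's configuration, or in keeping `.env` outside the public document root altogether.

```python
from pathlib import PurePosixPath
from urllib.parse import unquote, urlsplit


def is_safe_to_serve(request_target: str) -> bool:
    """Return False for requests that touch hidden files or try path traversal.

    Intended for a hypothetical static-file handler. Any path segment that starts
    with "." is refused: that covers .env, .git/config, .aws/credentials, and ".."
    traversal. Legitimate dot-paths such as /.well-known/ would need an explicit allowlist.
    """
    # Decode %2E-style escapes first so "/%2Eenv" is treated like "/.env".
    path = unquote(urlsplit(request_target).path)
    return not any(part.startswith(".") for part in PurePosixPath(path).parts)


if __name__ == "__main__":
    for target in ("/static/app.js", "/.env", "/config/.env?download=1", "/%2Eenv"):
        print(target, "->", "serve" if is_safe_to_serve(target) else "403 Forbidden")
```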

3️⃣ API Keys in Frontend Code (JavaScript, React, Angular): Some developers mistakenly embed API keys directly in frontend JavaScript code. Frontend code is delivered to every visitor's browser, so anyone can extract those keys with browser DevTools.
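The usual fix is to keep the key on a server you control and have the browser call that server instead. Here is a minimal sketch of the server side. It assumes a hypothetical weather API at `api.example.com` that accepts a bearer token, and a hypothetical `WEATHER_API_KEY` environment variable.

```python
import os
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical third-party API and environment variable, for illustration only.
UPSTREAM_URL = "https://api.example.com/v1/weather"
API_KEY_ENV = "WEATHER_API_KEY"


def build_upstream_request(city: str) -> Request:
    """Build the server-side call behind a route like /api/weather?city=...

    The browser only ever talks to our backend. The key is read from the
    server's environment and attached here, so it never appears in frontend code.
    """
    api_key = os.environ[API_KEY_ENV]  # raises KeyError if the key isn't configured
    url = f"{UPSTREAM_URL}?{urlencode({'city': city})}"
    return Request(url, headers={"Authorization": f"Bearer {api_key}"})
```

A real handler would pass the returned `Request` to `urllib.request.urlopen()` and relay the response to the browser. It should also apply its own rate limiting so that the proxy itself can't be abused.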

4️⃣ Logging API Keys in Error Logs: When an API call fails, some applications log the full request, API key included. If those error logs are publicly accessible, attackers can extract the keys from them.
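A logging filter that redacts key-like values before anything reaches a log file is a useful safety net. The two patterns below are assumptions: an `api_key` query parameter and an `Authorization: Bearer` header. Adjust them to match what your APIs actually use.

```python
import logging
import re

# Illustrative patterns: an api_key query parameter and a bearer token header.
SECRET_PATTERNS = [
    re.compile(r"(?i)(api[_-]?key=)[^&\s]+"),
    re.compile(r"(?i)(authorization:\s*bearer\s+)\S+"),
]


def redact(text: str) -> str:
    """Replace each secret value with [REDACTED], keeping the label before it."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub(r"\1[REDACTED]", text)
    return text


class RedactSecretsFilter(logging.Filter):
    """Logging filter that scrubs secrets from the fully formatted message."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())  # merge args into msg, then scrub
        record.args = None  # args are already merged; stop them being re-applied
        return True  # never drop the record, only clean it


if __name__ == "__main__":
    handler = logging.StreamHandler()
    # Attach to the handler: filters on a logger don't see records that
    # propagate up from child loggers.
    handler.addFilter(RedactSecretsFilter())
    logging.basicConfig(level=logging.INFO, handlers=[handler])
    logging.error("API call failed: GET /v1/charges?api_key=%s&limit=10", "abc123")
```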

5️⃣ CI/CD Pipeline Leaks: Misconfigured CI/CD pipelines (GitHub Actions, Jenkins, Travis CI) sometimes print secrets to their build logs. Attackers scan public logs to steal API keys and tokens.
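On GitHub Actions, secrets referenced through the `secrets` context are masked in logs automatically. Values your job creates at runtime are not, for example a short-lived token fetched from another service. The runner's `::add-mask::` workflow command covers that case. Here is a small Python sketch; the function names are illustrative.

```python
import os


def add_mask_command(value: str) -> str:
    """Build the GitHub Actions workflow command that hides `value` in later log output."""
    if not value or "\n" in value or "\r" in value:
        # Keep the sketch simple: only non-empty, single-line values.
        raise ValueError("expected a non-empty, single-line value")
    return f"::add-mask::{value}"


def mask_secret(value: str) -> None:
    """Emit the mask command, but only when running on a GitHub Actions runner."""
    # GitHub sets GITHUB_ACTIONS=true on its runners; elsewhere the command
    # would just be printed as plain text, leaking the value it meant to hide.
    if os.environ.get("GITHUB_ACTIONS") == "true":
        print(add_mask_command(value), flush=True)


if __name__ == "__main__":
    token = "example-runtime-token"  # hypothetical value obtained during the job
    mask_secret(token)
    # From here on, the runner replaces this value with *** in the job log.
```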

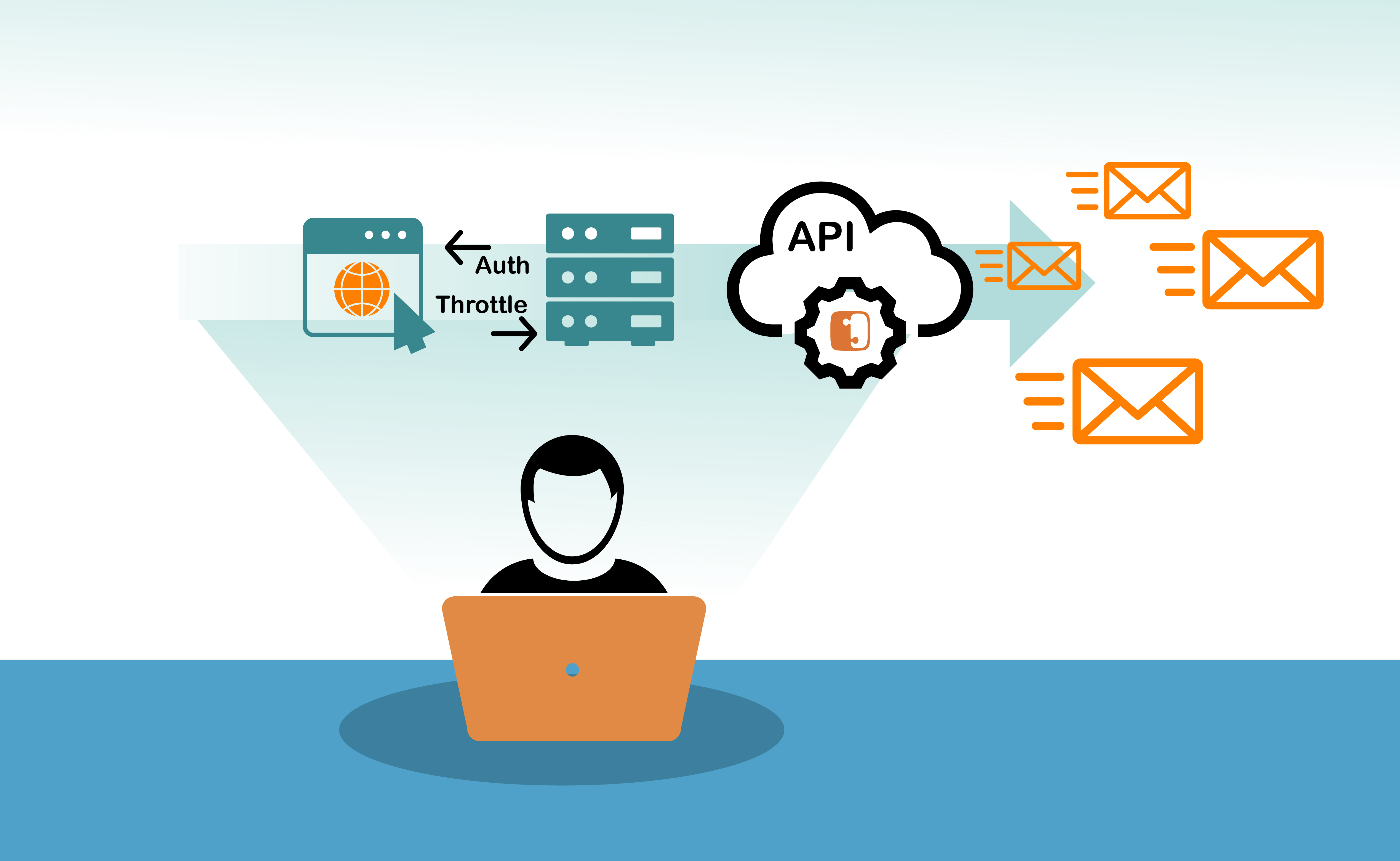

How Is LLM Training Involved?

Large Language Models (LLMs) like ChatGPT and other AI tools are trained on massive datasets scraped from the internet. These datasets include:

✔ Common Crawl data

✔ Public GitHub repositories

✔ Forum discussions (e.g., Stack Overflow, Reddit)

✔ Open-source documentation

Since these datasets aren’t always cleaned properly, API keys and passwords get mixed in with other training data. This means AI models could unintentionally memorize and regurgitate sensitive information when prompted in the right way!
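Dataset builders can reduce this risk by scrubbing secrets before training. One crude but common heuristic is Shannon entropy, H = -Σ p·log2(p) over a string's character frequencies, which early versions of secret scanners such as TruffleHog used. Random-looking keys use many different characters, while ordinary words repeat a few. In this sketch, the 20-character minimum and the 4.0 bits-per-character threshold are arbitrary assumptions to tune against real data.

```python
import math
import re
from collections import Counter

# Runs of 20+ characters from the alphabets keys are usually drawn from.
CANDIDATE_RE = re.compile(r"[A-Za-z0-9+/_=-]{20,}")
ENTROPY_THRESHOLD = 4.0  # bits per character; an assumption to tune on real data


def shannon_entropy(s: str) -> float:
    """H = -sum(p * log2(p)) over the character frequencies p of s."""
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def redact_high_entropy(text: str, threshold: float = ENTROPY_THRESHOLD) -> str:
    """Replace random-looking tokens with [REDACTED]; leave ordinary text alone."""

    def replace(match):
        token = match.group(0)
        return "[REDACTED]" if shannon_entropy(token) >= threshold else token

    return CANDIDATE_RE.sub(replace, text)


if __name__ == "__main__":
    doc = "config key: abcdefghijklmnopqrstuvwxyzABCDEF, see internationalization_support"
    print(redact_high_entropy(doc))
```

Expect false positives, such as hashes and UUIDs, and misses, such as short or low-entropy passwords. That is why production pipelines usually combine entropy checks with pattern-based detectors.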

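For example, researchers have shown that carefully crafted prompts can make a model reproduce memorized training text verbatim (so-called training data extraction attacks). That text could include any API keys or passwords that slipped into the training data.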

The Security Risks of Exposed API Keys

When API keys fall into the wrong hands, they can be used for malicious purposes, such as:

⚠ Accessing private databases

⚠ Stealing user data

⚠ Making unauthorized financial transactions

⚠ Launching bot attacks on services

Companies have suffered major security breaches because of a single leaked API key.

How Can You Prevent API Key Exposure?

To avoid these risks, developers and organizations must adopt better security practices. Here are some essential steps:

✅ Never hardcode secrets in your source code. Use environment variables instead.

✅ Scan your repositories for secrets using tools like GitGuardian or TruffleHog.

✅ Restrict API key permissions to limit access if a key is leaked.

✅ Rotate API keys regularly so that a leaked key stays useful for only a short time.

✅ Store CI/CD credentials in your platform's secret store, such as GitHub Actions secrets, rather than in workflow files.
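Here is a minimal Python sketch of the first tip in practice. It reads the key from an environment variable, fails loudly if the variable is missing, and wraps the value so that an accidental `print()` or log call shows `***` instead. The `Secret` class and the `EXAMPLE_API_KEY` name are hypothetical.

```python
import os


class Secret:
    """Wraps a credential so print() or logging shows *** instead of the value."""

    __slots__ = ("_value",)

    def __init__(self, value: str) -> None:
        self._value = value

    def reveal(self) -> str:
        # Call this only at the point where the key is actually sent.
        return self._value

    def __repr__(self) -> str:
        return "Secret('***')"

    __str__ = __repr__


def load_secret(name: str) -> Secret:
    """Read a secret from the environment, failing fast if it's missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"environment variable {name} is not set")
    return Secret(value)


if __name__ == "__main__":
    os.environ.setdefault("EXAMPLE_API_KEY", "demo-value")  # demo only; normally set outside the code
    api_key = load_secret("EXAMPLE_API_KEY")
    print(f"loaded {api_key}")  # prints: loaded Secret('***')
```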